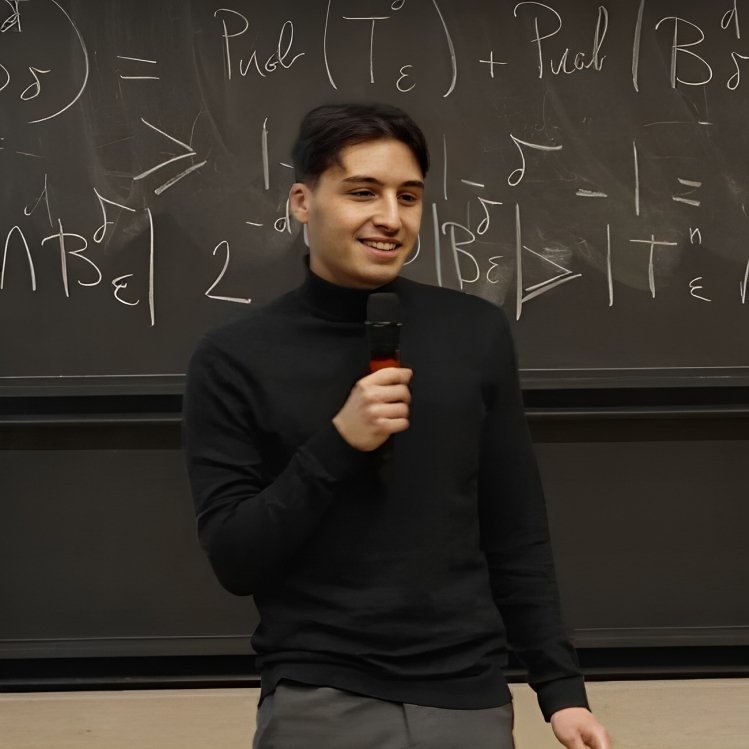

CV

Education

PhD in Computer science - University of Cambridge, Exp. 2028

PhD in Computer science - University of Cambridge, Exp. 2028

PhD on automated conjecture generation and theorem proving with AI systems.

Supervisor: Mateja Jamnik, Professor of Artificial Intelligence at the University of Cambridge.

MSc in Mathematics - Paris Cite University, 2024

MSc in Mathematics - Paris Cite University, 2024

Master’s degree in mathematical logic, with a thesis on automated theorem proving.

Grade: Incoming.

MSc in Mathematics - Sorbonne University, 2023

MSc in Mathematics - Sorbonne University, 2023

Master’s degree in machine learning, with a thesis on automated theorem proving.

Grade: First Class Honours (16.0/20).

Experience

Student Researcher - Google DeepMind, 2025

Student Researcher - Google DeepMind, 2025

Research at the intersection of AI, mathematics, computation and creativity using AlphaProof.

Supervisor: Tom Zahavy, Staff Research Scientist at Google DeepMind.

Contributor - Project Numina, since 2024

Contributor - Project Numina, since 2024

Research on neural theorem proving with Lean 4, developing the Kimina Lean Server, implementing tree search algorithms, fine-tuning LLMs and developing a reinforcement learning pipeline to train Kimina-Prover.

Supervisor: Jia Li, Co-Founder of Project Numina.

Research Intern - University of Cambridge, 2023

Research Intern - University of Cambridge, 2023

Research internship on neural theorem proving with Isabelle, implementing Monte Carlo tree search and fine-tuning LLaMA and GPT-2 as policy and value models.

Supervisor: Wenda Li, Research Associate at the University of Cambridge.

Research Intern - OpenAI, 2022

Research Intern - OpenAI, 2022

Research internship on neural theorem proving and auto-formalisation with Lean 3, formalising olympiad problems and monitoring the training of GPT‐3.5 on synthetic data with Expert Iteration.

Supervisor: Stanislas Polu, Research Engineer at OpenAI.

Publications

Target-Based Automated Conjecturing for Neural Theorem Proving

Marco Dos Santos, Albert Q. Jiang, Wenda Li, Mateja Jamnik

AI for Math Workshop at ICML 2025 (poster).

📄 Paper

Kimina-Prover Preview: Towards Large Formal Reasoning Models with Reinforcement Learning

Project Numina & Kimi Team

Preprint.

📄 Paper | 🤗 Models

Llemma: An Open Language Model For Mathematics

Zhangir Azerbayev, Hailey Schoelkopf, Keiran Paster, Marco Dos Santos, Stephen McAleer, Albert Q. Jiang, Jia Deng, Stella Biderman, Sean Welleck

ICLR 2024 (poster) and MATH-AI Workshop at NeurIPS 2023 (poster).

📄 Paper | 🤗 Model | 🤗 Dataset | Code

OpenWebMath: An Open Dataset of High-Quality Mathematical Web Text

Keiran Paster, Marco Dos Santos, Zhangir Azerbayev, Jimmy Ba

ICLR 2024 (poster) and MATH-AI Workshop at NeurIPS 2023 (oral & poster).

📄 Paper | 🤗 Dataset | Code

Research impact

Kimina-Prover is the first large reasoning model applied to theorem proving. It pushed the state-of-the-art on miniF2F (from 71% to 81% for the Preview version, and from 89% to 92% for the final version) and PutnamBench. The model has been used by:

- DeepSeek as the main baseline to compare their DeepSeek-Prover-V2 model;

- ByteDance Seed as a baseline and inspiration for their Seed-Prover model.

Llemma has been cited over 300 times. It was the best open-source model in mathematics when it was released, and it has been downloaded more than 300k times on Hugging Face. The model and its training dataset have been used by:

- Mistral AI to evaluate the long context performance of their Mixtral of Experts model on the Proof-Pile-2 dataset;

- DeepSeek as a baseline to compare their DeepSeekMath model;

- The Qwen team from Alibaba Group as an inspiration to train their Qwen2.5-Math models;

- several academic researchers as a baseline and inspiration for their models (e.g., MetaMath and TinyLlama).

OpenWebMath has been cited over 70 times. It was the #1 trending dataset on Hugging Face when it was released. The dataset has been used by:

- DeepSeek to build the continued pre-training dataset for DeepSeekMath and DeepSeek-Prover models;

- Microsoft to continuously pre-train their Rho-1 model;

- The Kimi team from Moonshot AI to train their Kimi k1.5 model;

- several academic researchers to build reasoning models (e.g., Quiet-STaR by Stanford researchers).

Awards and honours

EleutherAI Scholarship, 2024

Awarded a scholarship by EleutherAI to fully support my PhD on AI for mathematics.